Classes | |

| struct | BUFFER_INFO |

| struct | EVENT_DEFRAG_BUFFER |

Macros | |

| #define | MAX_DEFRAG_EVENTS 10 |

Functions | |

| INT | bm_match_event (short int event_id, short int trigger_mask, const EVENT_HEADER *pevent) |

| void | bm_remove_client_locked (BUFFER_HEADER *pheader, int j) |

| static void | bm_cleanup_buffer_locked (BUFFER *pbuf, const char *who, DWORD actual_time) |

| static void | bm_update_last_activity (DWORD millitime) |

| static BOOL | bm_validate_rp (const char *who, const BUFFER_HEADER *pheader, int rp) |

| static int | bm_incr_rp_no_check (const BUFFER_HEADER *pheader, int rp, int total_size) |

| static int | bm_next_rp (const char *who, const BUFFER_HEADER *pheader, const char *pdata, int rp) |

| static int | bm_validate_buffer_locked (const BUFFER *pbuf) |

| static void | bm_reset_buffer_locked (BUFFER *pbuf) |

| static void | bm_clear_buffer_statistics (HNDLE hDB, BUFFER *pbuf) |

| static void | bm_write_buffer_statistics_to_odb_copy (HNDLE hDB, const char *buffer_name, const char *client_name, int client_index, BUFFER_INFO *pbuf, BUFFER_HEADER *pheader) |

| static void | bm_write_buffer_statistics_to_odb (HNDLE hDB, BUFFER *pbuf, BOOL force) |

| INT | bm_open_buffer (const char *buffer_name, INT buffer_size, INT *buffer_handle) |

| INT | bm_get_buffer_handle (const char *buffer_name, INT *buffer_handle) |

| INT | bm_close_buffer (INT buffer_handle) |

| INT | bm_close_all_buffers (void) |

| INT | bm_write_statistics_to_odb (void) |

| INT | bm_set_cache_size (INT buffer_handle, size_t read_size, size_t write_size) |

| INT | bm_compose_event (EVENT_HEADER *event_header, short int event_id, short int trigger_mask, DWORD data_size, DWORD serial) |

| INT | bm_compose_event_threadsafe (EVENT_HEADER *event_header, short int event_id, short int trigger_mask, DWORD data_size, DWORD *serial) |

| INT | bm_add_event_request (INT buffer_handle, short int event_id, short int trigger_mask, INT sampling_type, EVENT_HANDLER *func, INT request_id) |

| INT | bm_request_event (HNDLE buffer_handle, short int event_id, short int trigger_mask, INT sampling_type, HNDLE *request_id, EVENT_HANDLER *func) |

| INT | bm_remove_event_request (INT buffer_handle, INT request_id) |

| INT | bm_delete_request (INT request_id) |

| static void | bm_validate_client_pointers_locked (const BUFFER_HEADER *pheader, BUFFER_CLIENT *pclient) |

| static BOOL | bm_update_read_pointer_locked (const char *caller_name, BUFFER_HEADER *pheader) |

| static void | bm_wakeup_producers_locked (const BUFFER_HEADER *pheader, const BUFFER_CLIENT *pc) |

| static void | bm_dispatch_event (int buffer_handle, EVENT_HEADER *pevent) |

| static void | bm_incr_read_cache_locked (BUFFER *pbuf, int total_size) |

| static BOOL | bm_peek_read_cache_locked (BUFFER *pbuf, EVENT_HEADER **ppevent, int *pevent_size, int *ptotal_size) |

| static int | bm_peek_buffer_locked (BUFFER *pbuf, BUFFER_HEADER *pheader, BUFFER_CLIENT *pc, EVENT_HEADER **ppevent, int *pevent_size, int *ptotal_size) |

| static void | bm_read_from_buffer_locked (const BUFFER_HEADER *pheader, int rp, char *buf, int event_size) |

| static void | bm_read_from_buffer_locked (const BUFFER_HEADER *pheader, int rp, std::vector< char > *vecptr, int event_size) |

| static BOOL | bm_check_requests (const BUFFER_CLIENT *pc, const EVENT_HEADER *pevent) |

| static int | bm_wait_for_more_events_locked (bm_lock_buffer_guard &pbuf_guard, BUFFER_CLIENT *pc, int timeout_msec, BOOL unlock_read_cache) |

| static int | bm_fill_read_cache_locked (bm_lock_buffer_guard &pbuf_guard, int timeout_msec) |

| static void | bm_convert_event_header (EVENT_HEADER *pevent, int convert_flags) |

| static int | bm_wait_for_free_space_locked (bm_lock_buffer_guard &pbuf_guard, int timeout_msec, int requested_space, bool unlock_write_cache) |

| static void | bm_write_to_buffer_locked (BUFFER_HEADER *pheader, int sg_n, const char *const sg_ptr[], const size_t sg_len[], size_t total_size) |

| static int | bm_find_first_request_locked (BUFFER_CLIENT *pc, const EVENT_HEADER *pevent) |

| static void | bm_notify_reader_locked (BUFFER_HEADER *pheader, BUFFER_CLIENT *pc, int old_write_pointer, int request_id) |

| INT | bm_send_event (INT buffer_handle, const EVENT_HEADER *pevent, int unused, int timeout_msec) |

| int | bm_send_event_vec (int buffer_handle, const std::vector< char > &event, int timeout_msec) |

| int | bm_send_event_vec (int buffer_handle, const std::vector< std::vector< char >> &event, int timeout_msec) |

| static INT | bm_flush_cache_locked (bm_lock_buffer_guard &pbuf_guard, int timeout_msec) |

| int | bm_send_event_sg (int buffer_handle, int sg_n, const char *const sg_ptr[], const size_t sg_len[], int timeout_msec) |

| static int | bm_flush_cache_rpc (int buffer_handle, int timeout_msec) |

| INT | bm_flush_cache (int buffer_handle, int timeout_msec) |

| static INT | bm_read_buffer (BUFFER *pbuf, INT buffer_handle, void **bufptr, void *buf, INT *buf_size, std::vector< char > *vecptr, int timeout_msec, int convert_flags, BOOL dispatch) |

| static INT | bm_receive_event_rpc (INT buffer_handle, void *buf, int *buf_size, EVENT_HEADER **ppevent, std::vector< char > *pvec, int timeout_msec) |

| INT | bm_receive_event (INT buffer_handle, void *destination, INT *buf_size, int timeout_msec) |

| INT | bm_receive_event_alloc (INT buffer_handle, EVENT_HEADER **ppevent, int timeout_msec) |

| INT | bm_receive_event_vec (INT buffer_handle, std::vector< char > *pvec, int timeout_msec) |

| static int | bm_skip_event (BUFFER *pbuf) |

| INT | bm_skip_event (INT buffer_handle) |

| static INT | bm_push_buffer (BUFFER *pbuf, int buffer_handle) |

| INT | bm_check_buffers () |

| INT | bm_poll_event () |

| INT | bm_empty_buffers () |

| static INT | bm_push_event (const char *buffer_name) |

Variables | |

| static DWORD | _bm_max_event_size = 0 |

| static int | _bm_lock_timeout = 5 * 60 * 1000 |

| static double | _bm_mutex_timeout_sec = _bm_lock_timeout/1000 + 15.000 |

| static EVENT_DEFRAG_BUFFER | defrag_buffer [MAX_DEFRAG_EVENTS] |

Detailed Description


Macro Definition Documentation

◆ MAX_DEFRAG_EVENTS

Function Documentation

◆ bm_add_event_request()

| INT bm_add_event_request | ( | INT | buffer_handle, |

| short int | event_id, | ||

| short int | trigger_mask, | ||

| INT | sampling_type, | ||

| EVENT_HANDLER * | func, | ||

| INT | request_id | ||

| ) |


Definition at line 8279 of file midas.cxx.

◆ bm_check_buffers()

| INT bm_check_buffers | ( | void | ) |

Check if any requested event is waiting in a buffer.

- Returns

| TRUE | More events are waiting |
| FALSE | No more events are waiting |

Definition at line 10921 of file midas.cxx.

◆ bm_check_requests()

|

static |

Definition at line 8933 of file midas.cxx.

◆ bm_cleanup_buffer_locked()

Check all clients on buffer, remove invalid clients

Definition at line 6029 of file midas.cxx.

◆ bm_clear_buffer_statistics()

Definition at line 6372 of file midas.cxx.

◆ bm_close_all_buffers()

| INT bm_close_all_buffers | ( | void | ) |

◆ bm_close_buffer()

Closes an event buffer previously opened with bm_open_buffer().

- Parameters

| buffer_handle | buffer handle |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

Definition at line 7060 of file midas.cxx.

◆ bm_compose_event()

| INT bm_compose_event | ( | EVENT_HEADER * | event_header, |

| short int | event_id, | ||

| short int | trigger_mask, | ||

| DWORD | data_size, | ||

| DWORD | serial | ||

| ) |

Compose a Midas event header. An event header can be set up manually or through this routine. If the data size of the event is not known when the header is composed, it can be set later with event_header->data_size = <...>. The header structure is created at the beginning of an event.

- Parameters

| event_header | pointer to the event header |
| event_id | event ID of the event |
| trigger_mask | trigger mask of the event |
| data_size | size of the data part of the event in bytes |
| serial | serial number |

- Returns

- BM_SUCCESS

Definition at line 8246 of file midas.cxx.
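The header fields filled in by this routine can be illustrated with a minimal, self-contained sketch. `SketchEventHeader`, `sketch_compose_event()` and `kSketchSuccess` are hypothetical stand-ins written for this page. The field layout and the wall-clock time stamp are assumptions, not copied from midas.h.

```cpp
#include <cassert>
#include <cstdint>
#include <ctime>

// Stand-in for EVENT_HEADER. Field names and widths are assumptions made
// for this sketch; consult midas.h for the real definition.
struct SketchEventHeader {
    int16_t  event_id;
    int16_t  trigger_mask;
    uint32_t serial_number;
    uint32_t time_stamp;
    uint32_t data_size;
};

constexpr int kSketchSuccess = 1;  // hypothetical stand-in for BM_SUCCESS

// Hypothetical helper that mirrors the parameter list of bm_compose_event().
inline int sketch_compose_event(SketchEventHeader* header, int16_t event_id,
                                int16_t trigger_mask, uint32_t data_size,
                                uint32_t serial) {
    assert(header != nullptr);
    header->event_id = event_id;
    header->trigger_mask = trigger_mask;
    header->data_size = data_size;
    header->serial_number = serial;
    // Assumption: the header is stamped with the current wall-clock time.
    header->time_stamp = static_cast<uint32_t>(std::time(nullptr));
    return kSketchSuccess;
}
```

A caller that does not yet know the payload size can pass 0 for `data_size` and assign `header.data_size` once the data part has been written.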

◆ bm_compose_event_threadsafe()

| INT bm_compose_event_threadsafe | ( | EVENT_HEADER * | event_header, |

| short int | event_id, | ||

| short int | trigger_mask, | ||

| DWORD | data_size, | ||

| DWORD * | serial | ||

| ) |

◆ bm_convert_event_header()

|

static |

Definition at line 9036 of file midas.cxx.

◆ bm_delete_request()

Deletes an event request previously made with bm_request_event(). When an event request is deleted, events of the requested type are no longer received. When a buffer is closed via bm_close_buffer(), all event requests for that buffer are deleted automatically.

- Parameters

| request_id | request identifier given by bm_request_event() |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

Definition at line 8551 of file midas.cxx.

◆ bm_dispatch_event()

|

static |

Definition at line 8790 of file midas.cxx.

◆ bm_empty_buffers()

| INT bm_empty_buffers | ( | void | ) |

Clears event buffer and cache. If an event buffer is large and a consumer is slow in analyzing events, events are usually received some time after they are produced. This effect is even more pronounced if a read cache is used (via bm_set_cache_size()). When changes to the hardware are made in the experiment, the consumer will still analyze old events before any new event that reflects the hardware change. Users can be fooled by looking at histograms which reflect the hardware change many seconds after it has been made.

To overcome this potential problem, the analyzer can call bm_empty_buffers() just after the hardware change has been made, which skips all old events contained in event buffers and read caches. Technically this is done by forwarding the read pointer of the client. No events are really deleted; they are still visible to other clients like the logger.

Note that the front-end also contains write buffers which can delay the delivery of events. The standard front-end framework mfe.c reduces this effect by flushing all buffers once every second.

- Returns

- BM_SUCCESS

Definition at line 11207 of file midas.cxx.
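The idea of "forwarding the read pointer of the client" can be sketched with a toy ring buffer in which every client keeps its own read pointer. All names below (`SketchBuffer`, `SketchClient`, `sketch_pending_bytes()`, `sketch_empty_for_client()`) are hypothetical and the layout is an assumption. The real shared-memory structures in midas.cxx are more involved.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SketchClient {
    std::size_t read_pointer = 0;  // next byte this client will consume
};

struct SketchBuffer {
    std::size_t size = 0;           // ring capacity in bytes, must be > 0
    std::size_t write_pointer = 0;  // where the producer writes next
    std::vector<SketchClient> clients;
};

// Bytes written by the producer that this client has not consumed yet.
inline std::size_t sketch_pending_bytes(const SketchBuffer& buffer,
                                        std::size_t client) {
    assert(buffer.size > 0 && client < buffer.clients.size());
    const std::size_t rp = buffer.clients[client].read_pointer;
    return (buffer.write_pointer + buffer.size - rp) % buffer.size;
}

// Skip everything that is pending for one client by moving only its own
// read pointer up to the write pointer. The data and the read pointers of
// all other clients are left untouched.
inline void sketch_empty_for_client(SketchBuffer& buffer, std::size_t client) {
    assert(client < buffer.clients.size());
    buffer.clients[client].read_pointer = buffer.write_pointer;
}
```

Because only one pointer moves, a second client (for example a logger) still sees every event that the first client skipped.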

◆ bm_fill_read_cache_locked()

|

static |

Definition at line 8959 of file midas.cxx.

◆ bm_find_first_request_locked()

|

static |

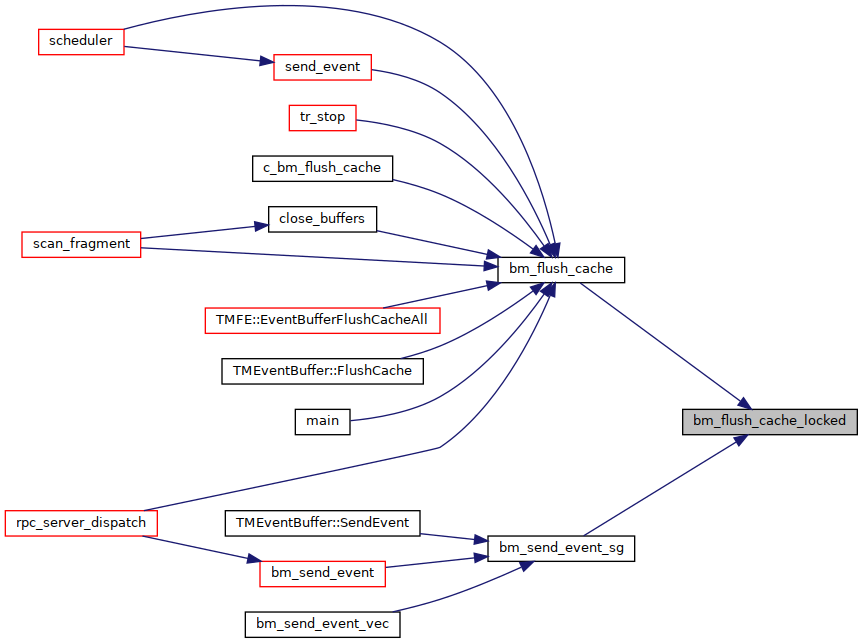

◆ bm_flush_cache()

| INT bm_flush_cache | ( | int | buffer_handle, |

| int | timeout_msec | ||

| ) |

Definition at line 10174 of file midas.cxx.

◆ bm_flush_cache_locked()

|

static |

Empty the write cache. This function should be used if events in the write cache should be visible to the consumers immediately. It should be called at the end of each run; otherwise events could be kept in the write buffer and flow into the data of the next run.

- Parameters

| buffer_handle | Buffer handle obtained via bm_open_buffer(), or 0 to flush data in the mserver event socket |
| timeout_msec | Timeout waiting for free space in the event buffer. If BM_WAIT, wait forever. If BM_NO_WAIT, the function returns immediately with a value of BM_ASYNC_RETURN without writing the cache. |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

| BM_ASYNC_RETURN | Routine called with timeout_msec == BM_NO_WAIT and the buffer does not have enough space to receive the cache |
| BM_NO_MEMORY | Event is too large for the network buffer or event buffer. One has to increase the event buffer size "/Experiment/Buffer sizes/SYSTEM" and/or /Experiment/MAX_EVENT_SIZE in ODB. |

Definition at line 10030 of file midas.cxx.

◆ bm_flush_cache_rpc()

|

static |

◆ bm_get_buffer_handle()

◆ bm_incr_read_cache_locked()

|

static |

◆ bm_incr_rp_no_check()

|

static |

◆ bm_match_event()

| INT bm_match_event | ( | short int | event_id, |

| short int | trigger_mask, | ||

| const EVENT_HEADER * | pevent | ||

| ) |

Check if an event matches a given event request by the event ID and trigger mask.

- Parameters

| event_id | Event ID of request |
| trigger_mask | Trigger mask of request |
| pevent | Pointer to event to check |

- Returns

- TRUE if event matches request

Definition at line 5978 of file midas.cxx.
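A simplified sketch of such a match is shown here. It follows the rules given in the bm_request_event() description: a wildcard ID accepts any event, and otherwise the request and the event must share at least one trigger bit. `SketchEvent`, `kSketchEventIdAll`, `kSketchTriggerAll` and `sketch_match_event()` are hypothetical names, and the wildcard values are assumptions. The real routine may handle further cases that are not shown here.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins for EVENTID_ALL and TRIGGER_ALL (values assumed).
constexpr int16_t kSketchEventIdAll = -1;
constexpr int16_t kSketchTriggerAll = -1;

// Only the two header fields that take part in matching.
struct SketchEvent {
    int16_t event_id;
    int16_t trigger_mask;
};

// Returns true if the event satisfies the request.
inline bool sketch_match_event(int16_t request_id, int16_t request_mask,
                               const SketchEvent* pevent) {
    assert(pevent != nullptr);
    // The ID must be equal unless the request asks for any ID.
    const bool id_ok = (request_id == kSketchEventIdAll) ||
                       (request_id == pevent->event_id);
    // The masks must share at least one bit unless any mask is accepted.
    const bool mask_ok = (request_mask == kSketchTriggerAll) ||
                         ((request_mask & pevent->trigger_mask) != 0);
    return id_ok && mask_ok;
}
```

For an event with ID 1 and trigger mask 0x0002, a request for ID 1 with mask 0x0003 matches (bit 1 is shared), while a request with mask 0x0001 does not.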

◆ bm_next_rp()

|

static |

Definition at line 6220 of file midas.cxx.

◆ bm_notify_reader_locked()

|

static |

Definition at line 9570 of file midas.cxx.

◆ bm_open_buffer()

Open an event buffer. Two default buffers are created by the system: the "SYSTEM" buffer is used to exchange events and the "SYSMSG" buffer is used to exchange system messages. The name and size of the event buffers are defined in midas.h as EVENT_BUFFER_NAME and DEFAULT_BUFFER_SIZE. A typical client opens the "SYSTEM" buffer, requests events with ID 1 and enters a main loop; events are then received in a callback such as process_event().

- Parameters

| buffer_name | Name of buffer |
| buffer_size | Default size of buffer in bytes. Can be overwritten with an ODB value |
| buffer_handle | Buffer handle returned by function |

- Returns

- BM_SUCCESS, BM_CREATED

| BM_NO_SHM | Shared memory cannot be created |
| BM_NO_SEMAPHORE | Semaphore cannot be created |
| BM_NO_MEMORY | Not enough memory to create buffer descriptor |
| BM_MEMSIZE_MISMATCH | Buffer size conflicts with an existing buffer of different size |
| BM_INVALID_PARAM | Invalid parameter |

Definition at line 6681 of file midas.cxx.

◆ bm_peek_buffer_locked()

|

static |

Definition at line 8858 of file midas.cxx.

◆ bm_peek_read_cache_locked()

|

static |

◆ bm_poll_event()

| INT bm_poll_event | ( | void | ) |

Definition at line 11093 of file midas.cxx.

◆ bm_push_buffer()

Check a buffer if an event is available and call the dispatch function if found.

- Parameters

| buffer_name | Name of buffer |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, BM_TRUNCATED, BM_ASYNC_RETURN, RPC_NET_ERROR

Definition at line 10869 of file midas.cxx.

◆ bm_push_event()

|

static |

Check a buffer if an event is available and call the dispatch function if found.

- Parameters

| buffer_name | Name of buffer |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, BM_TRUNCATED, BM_ASYNC_RETURN, BM_CORRUPTED, RPC_NET_ERROR

Definition at line 10885 of file midas.cxx.

◆ bm_read_buffer()

|

static |

Definition at line 10231 of file midas.cxx.

◆ bm_read_from_buffer_locked() [1/2]

|

static |

◆ bm_read_from_buffer_locked() [2/2]

|

static |

◆ bm_receive_event()

Receives events directly. This function is an alternative way to receive events without a main loop.

It can be used in analysis systems which actively receive events, rather than using callbacks. An analysis package could for example contain its own command line interface. A command like "receive 1000 events" could make it necessary to call bm_receive_event() 1000 times in a row to receive these events and then return to the command line prompt. The corresponding bm_request_event() call contains NULL as the callback routine to indicate that bm_receive_event() is called to receive events.

- Parameters

| buffer_handle | buffer handle |
| destination | destination address where the event is written to |
| buf_size | size of destination buffer on input, size of event plus header on return |
| timeout_msec | Time in milliseconds to wait for new data. Special values: BM_WAIT: wait forever, BM_NO_WAIT: do not wait, return BM_ASYNC_RETURN if no data is immediately available |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

| BM_TRUNCATED | The event is larger than the destination buffer and was therefore truncated |
| BM_ASYNC_RETURN | No event available |

Definition at line 10617 of file midas.cxx.
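The in/out behaviour of `buf_size` and the polling pattern described above can be sketched against an in-memory queue instead of a real event buffer. Everything here is hypothetical: `SketchStatus`, `sketch_receive()` and `sketch_receive_many()` only imitate the documented contract. Reporting the number of bytes actually copied in the truncated case is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <deque>
#include <vector>

// Hypothetical stand-ins for BM_SUCCESS, BM_TRUNCATED and BM_ASYNC_RETURN.
enum SketchStatus { kSketchSuccess, kSketchTruncated, kSketchAsyncReturn };

// Non-blocking receive: *buf_size is the capacity of destination on input
// and the number of bytes copied on return.
inline SketchStatus sketch_receive(std::deque<std::vector<char>>& queue,
                                   void* destination, int* buf_size) {
    assert(destination != nullptr && buf_size != nullptr && *buf_size >= 0);
    if (queue.empty())
        return kSketchAsyncReturn;  // nothing available, buf_size untouched
    const std::vector<char> event = queue.front();
    queue.pop_front();
    const int capacity = *buf_size;
    const int event_size = static_cast<int>(event.size());
    const int copied = std::min(capacity, event_size);
    if (copied > 0)
        std::memcpy(destination, event.data(), static_cast<std::size_t>(copied));
    *buf_size = copied;
    return event_size > capacity ? kSketchTruncated : kSketchSuccess;
}

// "Receive N events": poll until max_events were read or the queue is empty.
inline int sketch_receive_many(std::deque<std::vector<char>>& queue,
                               int max_events) {
    char buffer[64];
    int received = 0;
    while (received < max_events) {
        int size = static_cast<int>(sizeof(buffer));  // reset before each call
        if (sketch_receive(queue, buffer, &size) == kSketchAsyncReturn)
            break;
        ++received;
    }
    return received;
}
```

Note that the caller resets the size variable before every call, because the previous call overwrote it with the size of the event it returned.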

◆ bm_receive_event_alloc()

| INT bm_receive_event_alloc | ( | INT | buffer_handle, |

| EVENT_HEADER ** | ppevent, | ||

| int | timeout_msec | ||

| ) |

Receives events directly. This function is an alternative way to receive events without a main loop.

It can be used in analysis systems which actively receive events, rather than using callbacks. An analysis package could for example contain its own command line interface. A command like "receive 1000 events" could make it necessary to call bm_receive_event() 1000 times in a row to receive these events and then return to the command line prompt. The corresponding bm_request_event() call contains NULL as the callback routine to indicate that bm_receive_event() is called to receive events.

- Parameters

| buffer_handle | buffer handle |
| ppevent | pointer to the received event pointer; the event pointer should be free()d to avoid a memory leak |
| timeout_msec | Time in milliseconds to wait for new data. Special values: BM_WAIT: wait forever, BM_NO_WAIT: do not wait, return BM_ASYNC_RETURN if no data is immediately available |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

| BM_ASYNC_RETURN | No event available |

Definition at line 10698 of file midas.cxx.

◆ bm_receive_event_rpc()

|

static |

Definition at line 10450 of file midas.cxx.

◆ bm_receive_event_vec()

Receives events directly. This function is an alternative way to receive events without a main loop.

It can be used in analysis systems which actively receive events, rather than using callbacks. An analysis package could for example contain its own command line interface. A command like "receive 1000 events" could make it necessary to call bm_receive_event() 1000 times in a row to receive these events and then return to the command line prompt. The corresponding bm_request_event() call contains NULL as the callback routine to indicate that bm_receive_event() is called to receive events.

- Parameters

| buffer_handle | buffer handle |
| pvec | pointer to the vector that receives the event |
| timeout_msec | Time in milliseconds to wait for new data. Special values: BM_WAIT: wait forever, BM_NO_WAIT: do not wait, return BM_ASYNC_RETURN if no data is immediately available |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

| BM_ASYNC_RETURN | No event available |

Definition at line 10776 of file midas.cxx.

◆ bm_remove_client_locked()

| void bm_remove_client_locked | ( | BUFFER_HEADER * | pheader, |

| int | j | ||

| ) |

◆ bm_remove_event_request()

Delete a previously placed request for a specific event type in the client structure of the buffer referenced by buffer_handle.

- Parameters

| buffer_handle | Handle to the buffer in which the request was placed |
| request_id | Request ID returned by bm_request_event() |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, BM_NOT_FOUND, RPC_NET_ERROR

Definition at line 8484 of file midas.cxx.

◆ bm_request_event()

| INT bm_request_event | ( | HNDLE | buffer_handle, |

| short int | event_id, | ||

| short int | trigger_mask, | ||

| INT | sampling_type, | ||

| HNDLE * | request_id, | ||

| EVENT_HANDLER * | func | ||

| ) |

Place an event request based on certain characteristics. Multiple event requests can be placed for each buffer, which are later identified by their request ID. They can contain different callback routines. For examples, see bm_open_buffer() and bm_receive_event().

- Parameters

| buffer_handle | buffer handle obtained via bm_open_buffer() |
| event_id | event ID for requested events. Use EVENTID_ALL to receive events with any ID. |
| trigger_mask | trigger mask for requested events. The requested events must have at least one bit of their trigger mask in common with the requested trigger mask. Use TRIGGER_ALL to receive events with any trigger mask. |
| sampling_type | specifies how many events to receive. A value of GET_ALL receives all events which match the specified event ID and trigger mask. If the events are consumed more slowly than they are produced, the producer is automatically slowed down. A value of GET_NONBLOCKING receives as many events as possible without slowing down the producer. GET_ALL is typically used by the logger, while GET_NONBLOCKING is typically used by analyzers. |
| request_id | request ID returned by the function. This ID is passed to the callback routine and must be used in the bm_delete_request() routine. |
| func | callback routine which gets called when an event of the specified type is received |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE

| BM_NO_MEMORY | too many requests. The value MAX_EVENT_REQUESTS in midas.h should be increased. |

Definition at line 8431 of file midas.cxx.

◆ bm_reset_buffer_locked()

|

static |

◆ bm_send_event()

| INT bm_send_event | ( | INT | buffer_handle, |

| const EVENT_HEADER * | pevent, | ||

| int | unused, | ||

| int | timeout_msec | ||

| ) |

Definition at line 9645 of file midas.cxx.

◆ bm_send_event_sg()

| int bm_send_event_sg | ( | int | buffer_handle, |

| int | sg_n, | ||

| const char *const | sg_ptr[], | ||

| const size_t | sg_len[], | ||

| int | timeout_msec | ||

| ) |

Sends an event to a buffer. This function checks whether the buffer has enough space for the event, then copies the event to the buffer in shared memory. If clients have requests for the event, they are notified via a UDP packet.

- Parameters

| buffer_handle | Buffer handle obtained via bm_open_buffer() |
| source | Address of event buffer |
| buf_size | Size of event including event header in bytes |
| timeout_msec | Timeout waiting for free space in the event buffer. If BM_WAIT, wait forever. If BM_NO_WAIT, the function returns immediately with a value of BM_ASYNC_RETURN without writing the event to the buffer |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, BM_INVALID_PARAM

| BM_ASYNC_RETURN | Routine called with timeout_msec == BM_NO_WAIT and the buffer does not have enough space to receive the event |
| BM_NO_MEMORY | Event is too large for the network buffer or event buffer. One has to increase the event buffer size "/Experiment/Buffer sizes/SYSTEM" and/or /Experiment/MAX_EVENT_SIZE in ODB. |

Definition at line 9745 of file midas.cxx.
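The "check for space, then copy" sequence can be sketched for a scatter-gather argument list like the one in the signature above (`sg_n`, `sg_ptr[]`, `sg_len[]`). The ring buffer type, the status names and `sketch_send_sg()` are hypothetical. Wrapping a segment around the end of the ring is an assumption of this sketch, and the locking and client notification of the real routine are left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

struct SketchRing {
    std::vector<char> data;         // fixed-size storage, must not be empty
    std::size_t write_pointer = 0;  // next byte to write
    std::size_t used = 0;           // bytes not yet consumed by readers
};

// kSketchNoSpace plays the role of BM_ASYNC_RETURN with BM_NO_WAIT.
enum SketchSendStatus { kSketchSent, kSketchNoSpace };

// Copy sg_n segments into the ring as one contiguous event.
inline SketchSendStatus sketch_send_sg(SketchRing& ring, int sg_n,
                                       const char* const sg_ptr[],
                                       const std::size_t sg_len[]) {
    assert(sg_n >= 0 && !ring.data.empty());
    // Step 1: total event size is the sum of all segment lengths.
    std::size_t total = 0;
    for (int i = 0; i < sg_n; ++i)
        total += sg_len[i];
    // Step 2: refuse without writing anything if the event does not fit.
    if (total > ring.data.size() - ring.used)
        return kSketchNoSpace;
    // Step 3: copy segment by segment, wrapping at the end of the ring.
    for (int i = 0; i < sg_n; ++i) {
        std::size_t done = 0;
        while (done < sg_len[i]) {
            const std::size_t room = ring.data.size() - ring.write_pointer;
            const std::size_t n = std::min(room, sg_len[i] - done);
            std::memcpy(&ring.data[ring.write_pointer], sg_ptr[i] + done, n);
            ring.write_pointer = (ring.write_pointer + n) % ring.data.size();
            done += n;
        }
    }
    ring.used += total;
    return kSketchSent;
}
```

Checking the total size before the first copy keeps the operation all-or-nothing: a failed send leaves the ring exactly as it was.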

◆ bm_send_event_vec() [1/2]

| int bm_send_event_vec | ( | int | buffer_handle, |

| const std::vector< char > & | event, | ||

| int | timeout_msec | ||

| ) |

◆ bm_send_event_vec() [2/2]

| int bm_send_event_vec | ( | int | buffer_handle, |

| const std::vector< std::vector< char >> & | event, | ||

| int | timeout_msec | ||

| ) |

◆ bm_set_cache_size()

Modifies buffer cache size. Without a buffer cache, events are copied to/from the shared memory event by event.

To prevent processes from accessing the shared memory simultaneously, semaphores are used. Since semaphore operations are CPU consuming (typically 50-100us), this can slow down the data transfer, especially for small events. By using a cache, the number of semaphore operations is reduced dramatically. Instead of writing directly to the shared memory, the events are copied to a local cache buffer. When this buffer is full, it is copied to the shared memory in one operation. The same technique can be used when receiving events.

The drawback of this method is that the events have to be copied twice, once to the cache and once from the cache to the shared memory. It can therefore happen that the use of a cache even slows down data throughput in a given environment (computer type, OS type, event size). The cache size must therefore be optimized manually to maximize data throughput.

- Parameters

| buffer_handle | buffer handle obtained via bm_open_buffer() |
| read_size | cache size for reading events in bytes, zero for no cache |
| write_size | cache size for writing events in bytes, zero for no cache |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, BM_NO_MEMORY, BM_INVALID_PARAM

Definition at line 8105 of file midas.cxx.
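The trade-off described above (fewer lock operations at the price of a second copy) can be made visible with a small model that counts simulated semaphore acquisitions. `SketchSharedBuffer` and `SketchWriteCache` are hypothetical classes, and the flush policy shown is an assumption. This is not the caching code of midas.cxx.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Models the shared memory: every append costs one lock/unlock pair.
struct SketchSharedBuffer {
    std::vector<char> bytes;
    int lock_count = 0;  // number of simulated semaphore acquisitions

    void append_locked(const char* p, std::size_t n) {
        ++lock_count;
        bytes.insert(bytes.end(), p, p + n);
    }
};

// Collects events locally and writes them out in one locked operation.
class SketchWriteCache {
public:
    SketchWriteCache(SketchSharedBuffer& shared, std::size_t cache_size)
        : shared_(shared), cache_size_(cache_size) {}

    void send(const char* event, std::size_t n) {
        assert(event != nullptr);
        if (cache_size_ == 0 || n > cache_size_) {
            // No cache, or the event cannot fit: write it straight through.
            flush();
            shared_.append_locked(event, n);
            return;
        }
        if (cache_.size() + n > cache_size_)
            flush();  // make room first
        cache_.insert(cache_.end(), event, event + n);  // first copy
    }

    void flush() {
        if (cache_.empty())
            return;
        shared_.append_locked(cache_.data(), cache_.size());  // second copy
        cache_.clear();
    }

private:
    SketchSharedBuffer& shared_;
    std::size_t cache_size_;
    std::vector<char> cache_;
};
```

With eight 16-byte events and a 64-byte cache, this model takes the lock twice instead of eight times, while every byte is copied twice. That extra copy is why a cache can reduce throughput for large events.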

◆ bm_skip_event() [1/2]

|

static |

◆ bm_skip_event() [2/2]

Skip all events in current buffer.

Useful for single event displays to see the newest events.

- Parameters

| buffer_handle | Handle of the buffer. Must be obtained via bm_open_buffer(). |

- Returns

- BM_SUCCESS, BM_INVALID_HANDLE, RPC_NET_ERROR

Definition at line 10841 of file midas.cxx.

◆ bm_update_last_activity()

|

static |

◆ bm_update_read_pointer_locked()

|

static |

Definition at line 8687 of file midas.cxx.

◆ bm_validate_buffer_locked()

|

static |

Definition at line 6271 of file midas.cxx.

◆ bm_validate_client_pointers_locked()

|

static |

Definition at line 8589 of file midas.cxx.

◆ bm_validate_rp()

|

static |

Definition at line 6153 of file midas.cxx.

◆ bm_wait_for_free_space_locked()

|

static |

- signal other clients wait mode

- validate client index: we could have been removed from the buffer

Definition at line 9047 of file midas.cxx.

◆ bm_wait_for_more_events_locked()

|

static |

Definition at line 9359 of file midas.cxx.

◆ bm_wakeup_producers_locked()

|

static |

Definition at line 8754 of file midas.cxx.

◆ bm_write_buffer_statistics_to_odb()

Definition at line 6550 of file midas.cxx.

◆ bm_write_buffer_statistics_to_odb_copy()

|

static |

Definition at line 6428 of file midas.cxx.

◆ bm_write_statistics_to_odb()

| INT bm_write_statistics_to_odb | ( | void | ) |

◆ bm_write_to_buffer_locked()

|

static |

Definition at line 9471 of file midas.cxx.

Variable Documentation

◆ _bm_lock_timeout

◆ _bm_max_event_size

◆ _bm_mutex_timeout_sec

|

static |

◆ defrag_buffer

|

static |
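The initializers listed in the Variables summary, `5 * 60 * 1000` and `_bm_lock_timeout/1000 + 15.000`, can be worked out with a short sketch. The `sketch_` names are hypothetical copies of those expressions. The point of interest is that the division is performed on integers before the floating-point constant is added.

```cpp
#include <cassert>

// Copies of the two initializers under hypothetical names.
constexpr int    sketch_lock_timeout_ms   = 5 * 60 * 1000;
constexpr double sketch_mutex_timeout_sec = sketch_lock_timeout_ms / 1000 + 15.000;

static_assert(sketch_lock_timeout_ms == 300000, "five minutes in milliseconds");
static_assert(sketch_mutex_timeout_sec == 315.0, "lock timeout plus 15 seconds");

// Same expression for an arbitrary lock timeout. The integer division
// drops any fraction of a second before 15.0 is added.
inline double sketch_mutex_timeout_for(int lock_timeout_ms) {
    assert(lock_timeout_ms >= 0);
    return lock_timeout_ms / 1000 + 15.000;
}
```

With the default value the result is exact (315 seconds); for a value such as 1500 ms the same expression yields 16.0 rather than 16.5.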